What is AWS ECS?

Amazon Elastic Container Service (Amazon ECS) is a fully managed container orchestration service for deploying, managing, and scaling containerized applications. Because AWS operates the control plane, ECS builds AWS configuration and operational best practices into the service, and you don’t have to run your own orchestration layer. It integrates with AWS services such as Amazon Elastic Container Registry (Amazon ECR) and with third-party tools such as Docker, so teams can concentrate on building applications rather than setting up environments. ECS can run and scale container workloads across AWS Regions in the cloud and, through ECS Anywhere, on on-premises servers.

AWS ECS Key Takeaways

- ECS, or Elastic Container Service, runs Docker containers. Docker is a containerization technology: it uses operating-system-level virtualization to package an application with its dependencies, which streamlines deployment.

- ECS is built around Docker images. An image is a packaged snapshot of an application or service, including its dependencies, and ECS launches containers from these images on AWS compute.

- AWS Fargate is a serverless compute engine for containers that pairs nicely with ECS. Serverless architectures aren’t technically without servers; they abstract away the complexity of managing hardware, so users work only on deploying software and need not worry about the servers underneath.

- Deploying an ECS cluster is straightforward. You’ll need to specify the settings for the cluster and its underlying capacity: EC2 instances that you run, Fargate, or both.

ECS Fundamentals | Understanding Docker and Virtualization

Before we dive into AWS ECS, it’s important to have a clear picture of virtualization and containerization, and of the most widely used containerization tool in the industry, Docker. This section gives a brief overview of both so that we’re all working from the same fundamentals.

What is Docker?

We’re all familiar with a personal computer: a device that runs an operating system and a set of applications that let us accomplish a variety of tasks (email, web browsing, video games, etc.). A personal computer contains the key components needed to run these applications: a CPU (Central Processing Unit), a GPU (Graphics Processing Unit), RAM (Random Access Memory), a network interface, and more. A virtual machine (VM) is software that is allocated a portion of the host computer’s resources and runs its own operating system as a seemingly separate system. In other words, a virtual machine is a software-defined computer that runs on a share of the host’s hardware, managed by a layer called a hypervisor.

There are multiple tools for creating virtual machines, for example VirtualBox, Parallels, VMware, and Citrix.

Docker isn’t virtual machine software.

Instead of emulating a full computer, Docker packages applications into isolated containers that share the kernel of the host operating system (OS). This saves a lot of resources while keeping the isolation benefits of virtualization. Docker has changed the way we package and ship software and is one of the core technologies behind the infrastructure on which most modern applications are deployed.

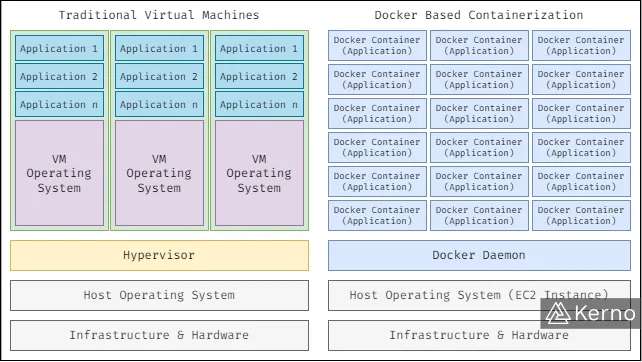

As shown in the figure above, there’s a clear advantage to using Docker over traditional virtualization. In the stack on the left side of the diagram, the host supports three virtual machines, each allocated resources to run its own operating system (OS) and the applications on top of it. The right-hand side represents Docker containerization. The immediate difference is the number of applications that run on the host: containers share the host operating system’s kernel instead of each carrying a full copy of the OS, so every application runs in its own isolated environment without the overhead of a guest OS.

Understanding Docker Images

Docker is versatile: users can choose from a large catalog of prebuilt images on Docker Hub or create their own. Engineers typically start from existing images on the Hub to save time and build custom images when the business requires it. In almost all scenarios, applications need to be modified as they mature and are used to solve additional business problems, which leads to new versions. Source code versions are most commonly managed with Git, while Docker images are versioned with tags (for example, my-app:1.2.0), so a build pipeline can produce a new tagged image whenever the code changes.

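A Docker image is a read-only template that packages an application together with its dependencies and configuration; a container is a running instance of an image. Because the image carries everything the application needs, it is easy to deploy on other systems. This is a key advantage of Docker: the same image runs consistently on any host with a compatible Docker engine, whether that’s Linux, Windows, or macOS (on Windows and macOS, Docker Desktop runs Linux containers inside a lightweight virtual machine).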

The figure above illustrates how a Docker image is created and stored, and what it contains. The first step in creating a Docker image is writing a Dockerfile. This file is not YAML: it is a plain-text list of build instructions (such as FROM, COPY, RUN, and CMD) that Docker executes in order to assemble the image. Images are stored in and retrieved from registries designed for Docker images. As discussed above, Docker Hub is one of the most popular registries, but it is operated by Docker. In some scenarios, you’d want to manage your own images; one option is Amazon ECR (Elastic Container Registry). Both accomplish the same task: storing Docker images, keeping track of different versions of those images through tags, and serving as a source from which users can pull images.
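To make the Dockerfile and registry steps concrete, the sketch below holds a minimal, hypothetical Dockerfile for a small Python web app as a string (each line is a build instruction, not a YAML key) and builds the reference under which such an image would be pushed to a private Amazon ECR repository. ECR references follow the pattern account-id.dkr.ecr.region.amazonaws.com/repository:tag; the account ID, Region, repository name, requirements.txt, and app.py used here are placeholders.

```python
# A minimal, hypothetical Dockerfile. Each line is a build instruction
# (FROM, WORKDIR, COPY, RUN, CMD) in Docker's own format, not YAML.
DOCKERFILE = """\
FROM python:3.12-slim
WORKDIR /app
COPY requirements.txt .
RUN pip install --no-cache-dir -r requirements.txt
COPY . .
CMD ["python", "app.py"]
"""


def ecr_image_uri(account_id: str, region: str, repository: str, tag: str = "latest") -> str:
    """Build a private Amazon ECR image reference.

    The tag (e.g. "1.0.0" or a Git commit SHA) identifies one image version.
    """
    if not (account_id.isdigit() and len(account_id) == 12):
        raise ValueError("AWS account IDs are 12 digits")
    return f"{account_id}.dkr.ecr.{region}.amazonaws.com/{repository}:{tag}"


if __name__ == "__main__":
    # Placeholder account ID and repository name, for illustration only.
    print(ecr_image_uri("123456789012", "us-east-1", "my-app", "1.0.0"))
    # prints: 123456789012.dkr.ecr.us-east-1.amazonaws.com/my-app:1.0.0
```

With the Docker CLI, you would build the image with docker build -t followed by that reference, authenticate to the registry (for example by piping aws ecr get-login-password into docker login --username AWS --password-stdin), and then run docker push with the same reference.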

Docker on AWS

Now that we have a general understanding of Docker and Docker images, let’s briefly discuss how they relate to AWS and the services that run on its infrastructure. Because containers are a core component of most modern applications, AWS offers multiple services that run containers or extend containerization on its infrastructure.

Amazon Elastic Container Service (AWS ECS)

Amazon ECS is a container orchestration platform that lets users deploy Docker images onto EC2 instances or AWS Fargate. ECS manages the deployment of those containers, reports the health and performance of the underlying infrastructure through Amazon CloudWatch, and provides a streamlined way to run images across many instances.
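In ECS, the image to deploy is described in a task definition, which names the image and declares the CPU, memory, and ports each container needs. The sketch below builds the keyword arguments for boto3’s ECS register_task_definition call without contacting AWS. The family name, the nginx image, and the 256 CPU / 512 MiB sizing are illustrative assumptions; using the awsvpc network mode and task-level CPU and memory lets the same definition target both the EC2 and Fargate launch types.

```python
import json


def build_task_definition(family: str, image: str, container_port: int = 80,
                          cpu: str = "256", memory: str = "512") -> dict:
    """Keyword arguments for boto3's ECS register_task_definition call."""
    return {
        "family": family,
        "networkMode": "awsvpc",                      # required by Fargate
        "requiresCompatibilities": ["EC2", "FARGATE"],
        "cpu": cpu,                                   # task-level CPU units (1024 = 1 vCPU), as a string
        "memory": memory,                             # task-level memory in MiB, as a string
        "containerDefinitions": [
            {
                "name": family,
                "image": image,                       # any image the task can pull
                "essential": True,                    # task stops if this container stops
                "portMappings": [{"containerPort": container_port, "protocol": "tcp"}],
            }
        ],
    }


if __name__ == "__main__":
    kwargs = build_task_definition("hello-web", "nginx:latest")
    print(json.dumps(kwargs, indent=2))
    # To register it for real (requires boto3 and AWS credentials):
    #   import boto3
    #   boto3.client("ecs").register_task_definition(**kwargs)
```

Keeping the request as a plain dictionary makes it easy to review or test before anything is sent to AWS.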

Amazon Elastic Kubernetes Service (AWS EKS)

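Kubernetes is an open-source container orchestration engine originally developed by Google and now maintained by the Cloud Native Computing Foundation (CNCF). It handles the deployment, scaling, networking, and management of containerized applications, including those built with Docker. Amazon EKS is AWS’s managed Kubernetes service: AWS runs the Kubernetes control plane, and your workloads run on EC2 instances or Fargate.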

AWS Fargate

Fargate is AWS’s serverless compute engine for containers. A serverless approach lets users specify what needs to be deployed and lets AWS handle how it is deployed. In other words, with Fargate the user specifies the Docker images to run and the CPU and memory each task needs, and AWS provisions and manages the underlying servers; there are no EC2 instances for the user to manage. Extensive engineering goes into operating that hardware so that AWS users are shielded from those challenges.

How Does AWS ECS Work?

As briefly mentioned above, ECS is a container service that lets the user control how containers are deployed to different EC2 instances. With the EC2 launch type, ECS is separate from the EC2 service in the sense that the user first provisions the instances and then runs the ECS container agent on them. The agent registers each instance with a cluster and starts and stops containers on ECS’s behalf; the Amazon ECS-optimized AMIs come with the agent preinstalled.

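As shown in the figure above, ECS connects to each EC2 instance through the ECS container agent. Once the agent is in place, ECS decides where to place containers (grouped into tasks) according to the task placement strategies and constraints you configure. The main strategies are spread, which distributes tasks evenly across a field such as Availability Zone or instance ID; binpack, which places tasks on the instances with the least remaining CPU or memory so that fewer instances are in use; and random.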
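The difference between packing and spreading is easiest to see in a toy model. The sketch below is a deliberately simplified, hypothetical model of two ECS placement strategies, not ECS’s actual scheduler: it considers CPU only, ignores memory, constraints, and Availability Zones, and the Instance class and place function are illustrative names.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Instance:
    instance_id: str
    free_cpu: int          # unreserved CPU units (1024 = 1 vCPU)
    task_count: int = 0


def place(instances: List[Instance], task_cpu: int, strategy: str) -> str:
    """Choose an instance for one task and reserve its CPU (simplified model).

    binpack: the instance with the LEAST free CPU that still fits the task.
    spread:  the instance running the FEWEST tasks (ties go to list order).
    """
    candidates = [i for i in instances if i.free_cpu >= task_cpu]
    if not candidates:
        raise RuntimeError("no instance has enough free CPU")
    if strategy == "binpack":
        chosen = min(candidates, key=lambda i: i.free_cpu)
    elif strategy == "spread":
        chosen = min(candidates, key=lambda i: i.task_count)
    else:
        raise ValueError(f"unsupported strategy: {strategy}")
    chosen.free_cpu -= task_cpu
    chosen.task_count += 1
    return chosen.instance_id


if __name__ == "__main__":
    for strategy in ("binpack", "spread"):
        fleet = [Instance("i-a", 2048), Instance("i-b", 2048)]
        print(strategy, [place(fleet, 512, strategy) for _ in range(3)])
    # binpack ['i-a', 'i-a', 'i-a']
    # spread ['i-a', 'i-b', 'i-a']
```

Binpack keeps i-b empty so it could be scaled in, while spread balances load across both instances. In a real ECS service, strategies are set with the placementStrategy parameter, for example [{"type": "spread", "field": "attribute:ecs.availability-zone"}, {"type": "binpack", "field": "memory"}].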

Amazon ECS - Fargate Launch Type

The Fargate launch type allows serverless deployment of Docker images. AWS handles the underlying infrastructure, so there are no EC2 instances to provision or patch, and the user can focus on what matters most: building and specifying the applications to deploy. This launch type requires the user to allocate CPU and memory to each task and to set guardrails such as the minimum and maximum number of tasks. With service auto scaling configured within those limits, ECS adds or removes Fargate tasks to accommodate the load. Keep in mind that Fargate bills for the vCPU and memory your tasks request while they run, so costs can run high if applications scale or consume resources at a rate you didn’t anticipate. CloudWatch alarms on usage and billing metrics are critical for catching these issues early.
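Allocating resources on Fargate means picking one of a fixed set of CPU and memory combinations, and the cost scales with both. The sketch below checks a size against the valid Linux combinations for 0.25 to 4 vCPU and produces a rough cost estimate. The per-vCPU-hour and per-GB-hour prices are inputs you must supply; the values in the example are placeholders, not real AWS prices, and the estimate ignores storage, data transfer, and billing minimums.

```python
# Valid Fargate sizes for Linux tasks in the 0.25-4 vCPU range:
# CPU units (1024 = 1 vCPU) -> allowed memory values in MiB.
# Larger 8- and 16-vCPU sizes also exist; check the ECS docs for those.
FARGATE_SIZES = {
    256: [512, 1024, 2048],
    512: list(range(1024, 4096 + 1, 1024)),
    1024: list(range(2048, 8192 + 1, 1024)),
    2048: list(range(4096, 16384 + 1, 1024)),
    4096: list(range(8192, 30720 + 1, 1024)),
}


def is_valid_fargate_size(cpu: int, memory_mib: int) -> bool:
    return memory_mib in FARGATE_SIZES.get(cpu, [])


def estimate_cost(cpu: int, memory_mib: int, tasks: int, hours: float,
                  price_per_vcpu_hour: float, price_per_gb_hour: float) -> float:
    """Rough estimate: (vCPU * vCPU rate + GB * GB rate) * hours * tasks."""
    if not is_valid_fargate_size(cpu, memory_mib):
        raise ValueError(f"{cpu} CPU / {memory_mib} MiB is not a valid Fargate size")
    vcpu = cpu / 1024
    gb = memory_mib / 1024
    return (vcpu * price_per_vcpu_hour + gb * price_per_gb_hour) * hours * tasks


if __name__ == "__main__":
    # Placeholder rates, NOT real AWS prices: look up current Fargate pricing.
    cost = estimate_cost(512, 1024, tasks=4, hours=730,
                         price_per_vcpu_hour=0.04, price_per_gb_hour=0.004)
    print(f"~${cost:.2f} for 4 tasks over 730 hours")
    # prints: ~$70.08 for 4 tasks over 730 hours
```

Because the estimate grows linearly with the task count, the maximum number of tasks you allow in service auto scaling effectively caps the worst-case compute bill.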

Hands-On Lab Using AWS ECS

In this section, we’re going to look at a practical deployment of AWS ECS and see what the service has to offer.

Step 1 - Navigate to the ECS Console & Create a Cluster

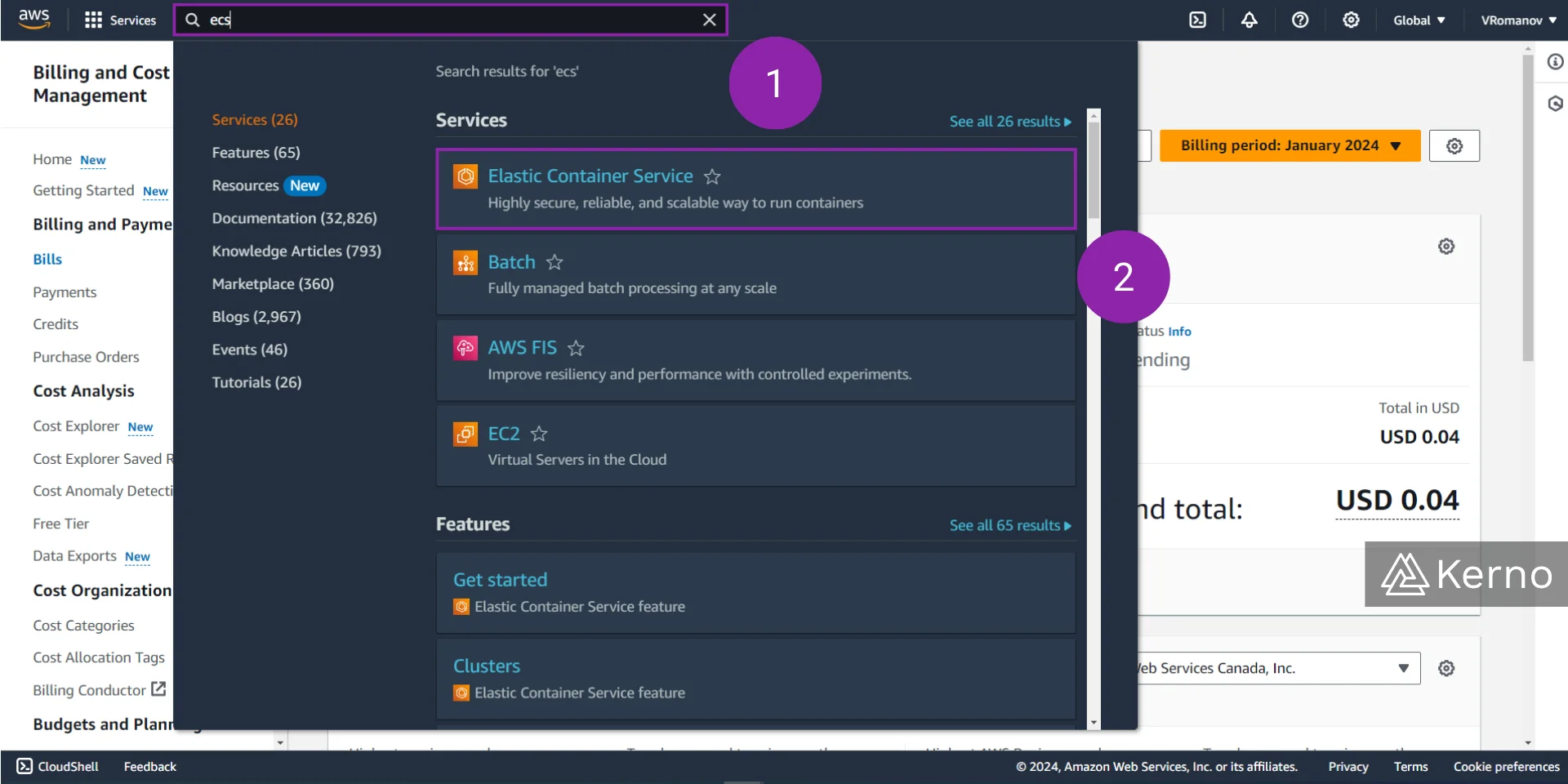

1.1 - From the AWS Console, search for “ecs.”

1.2 - From the drop-down menu, click on “Amazon Elastic Container Service.”

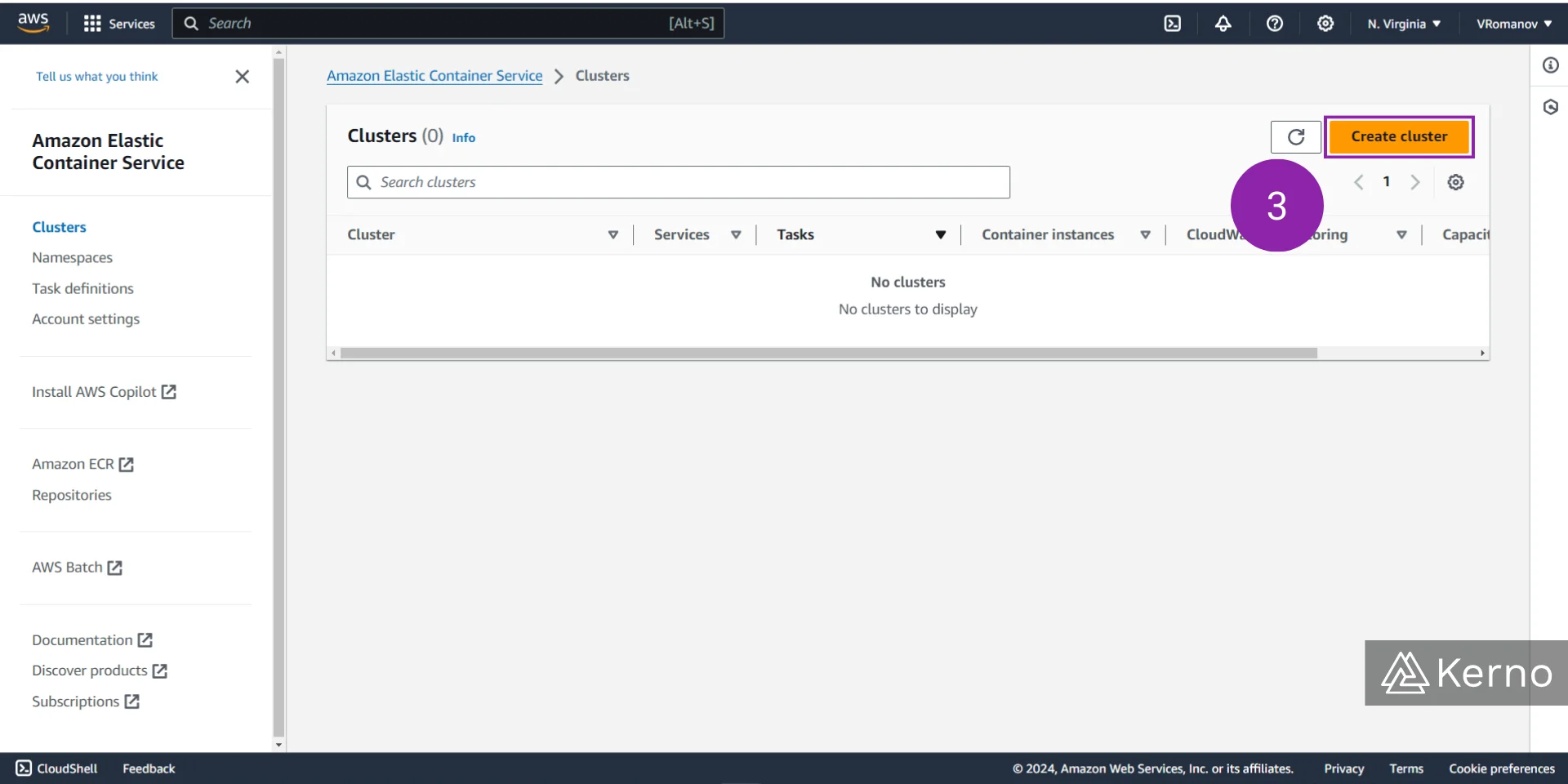

1.3 - On the page's top left side, click “Create cluster.”

Step 2 - Setup Cluster Parameters & Specify EC2 Instances to Use

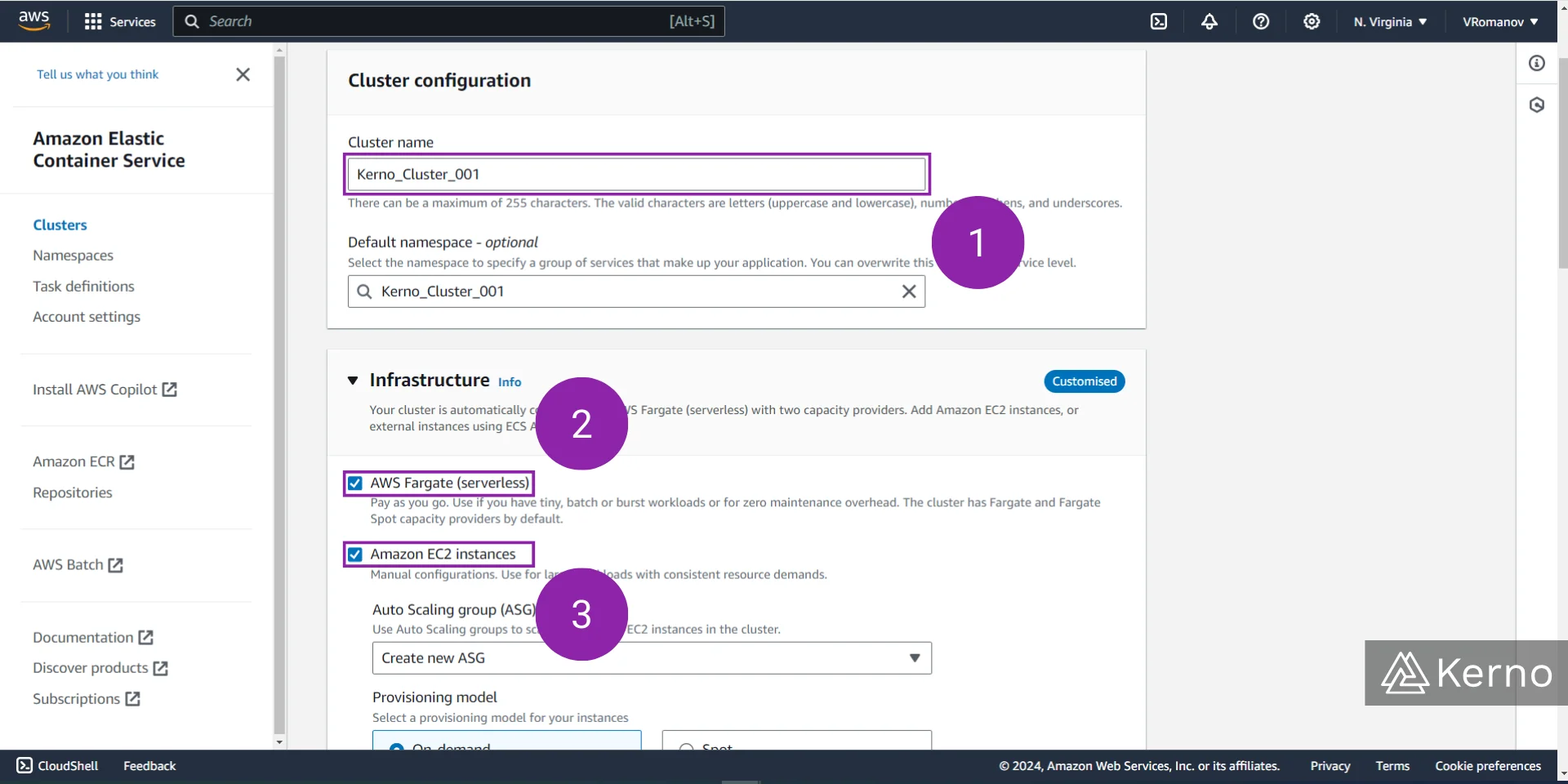

2.1 - In the “Cluster name” field, enter a unique name for your cluster.

2.2, 2.3 - In the “Infrastructure” section, select “AWS Fargate (serverless)” and “Amazon EC2 instances.” Note that your application can use either one or both of the services for deployment. In this example, we’re going to choose both.

2.4 - For EC2 capacity, ECS uses an Auto Scaling group (ASG). In the Auto Scaling group field, we’ve left the default, which creates a new ASG; you can also choose one you’ve created in the past.
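When an ASG supplies the EC2 capacity, two pieces connect its instances to the cluster: user data that tells the ECS container agent which cluster to join, and a capacity provider that links the ASG to ECS. The console sets these up for you; the sketch below shows roughly what they look like if you do it yourself. It assumes instances launched from the Amazon ECS-optimized AMI, where the agent reads /etc/ecs/ecs.config and joins the cluster named “default” if ECS_CLUSTER is not set. The cluster name, capacity provider name, and ASG ARN are placeholders, and create_capacity_provider is boto3’s ECS call.

```python
import re

# ECS cluster names: up to 255 letters, numbers, hyphens, and underscores.
CLUSTER_NAME = re.compile(r"[A-Za-z0-9_-]{1,255}")


def ecs_agent_user_data(cluster_name: str) -> str:
    """User-data script for the ASG's launch template (ECS-optimized AMI assumed)."""
    if not CLUSTER_NAME.fullmatch(cluster_name):
        raise ValueError(f"invalid cluster name: {cluster_name!r}")
    return f"#!/bin/bash\necho ECS_CLUSTER={cluster_name} >> /etc/ecs/ecs.config\n"


def build_asg_capacity_provider(name: str, asg_arn: str, target_capacity: int = 100) -> dict:
    """Keyword arguments for boto3's ECS create_capacity_provider call.

    With managed scaling enabled, ECS adjusts the ASG's desired count (within
    the group's minimum and maximum) so that instance utilization tracks
    target_capacity, a percentage.
    """
    if name.startswith(("aws", "ecs", "fargate")):
        raise ValueError("capacity provider names can't start with aws, ecs, or fargate")
    if not 0 < target_capacity <= 100:
        raise ValueError("target_capacity must be in the range 1-100")
    return {
        "name": name,
        "autoScalingGroupProvider": {
            "autoScalingGroupArn": asg_arn,
            "managedScaling": {"status": "ENABLED", "targetCapacity": target_capacity},
            "managedTerminationProtection": "DISABLED",
        },
    }


if __name__ == "__main__":
    print(ecs_agent_user_data("my-ecs-cluster"))
    # Placeholder ARN; substitute the ARN of your own Auto Scaling group.
    asg_arn = ("arn:aws:autoscaling:us-east-1:123456789012:autoScalingGroup:"
               "EXAMPLE:autoScalingGroupName/my-ecs-asg")
    print(build_asg_capacity_provider("my-ecs-asg-cp", asg_arn))
    # With boto3 and credentials:
    #   boto3.client("ecs").create_capacity_provider(**kwargs)
```

Validating the cluster name before it goes into a shell script also prevents a malformed name from injecting extra commands into the user data.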

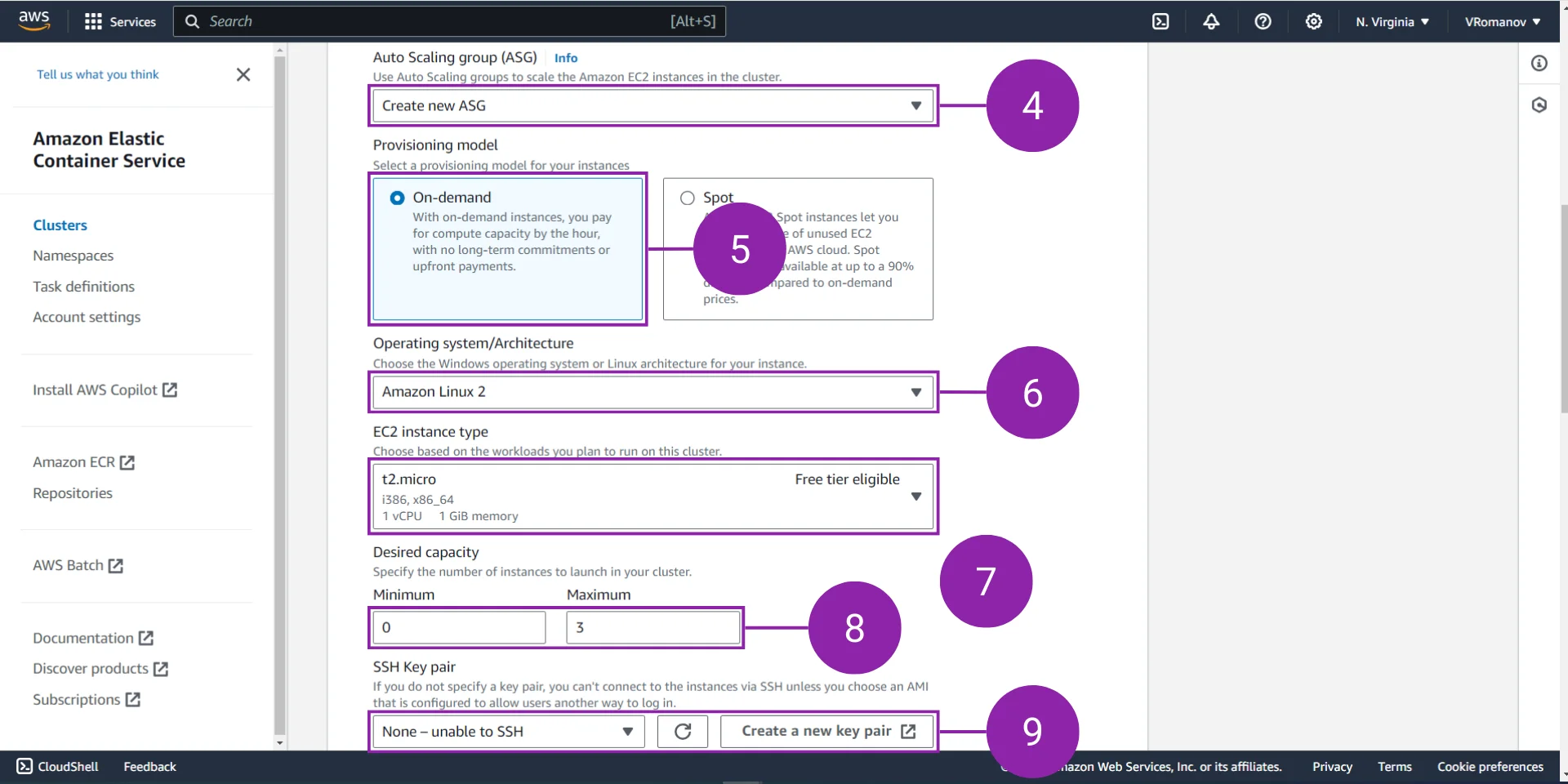

2.5 - AWS offers various provisioning models. To keep things simple, and because we’ve covered this concept extensively in the EC2 guide, we’ll leave the default, “On-demand.”

2.6 - From the “Operating system/Architecture” dropdown, select the OS you’d like to run on the EC2 instances; the default is Amazon Linux 2.

2.7 - From the “EC2 instance type” dropdown, select the size and specs of the instance you’d like to provision. Note that t2.micro instances may be covered by the AWS Free Tier, subject to its usage limits.

2.8 - In the “Desired capacity” section, set the minimum and maximum number of instances ECS can provision. Within those bounds, ECS can automatically add or remove instances based on the load of your applications and the specs of the instances.

2.9 - In the “SSH Key pair” field, choose the key pair that will allow SSH access to these instances.

Deploying ECS requires a VPC. We’ve covered how to configure AWS VPC in a separate tutorial.

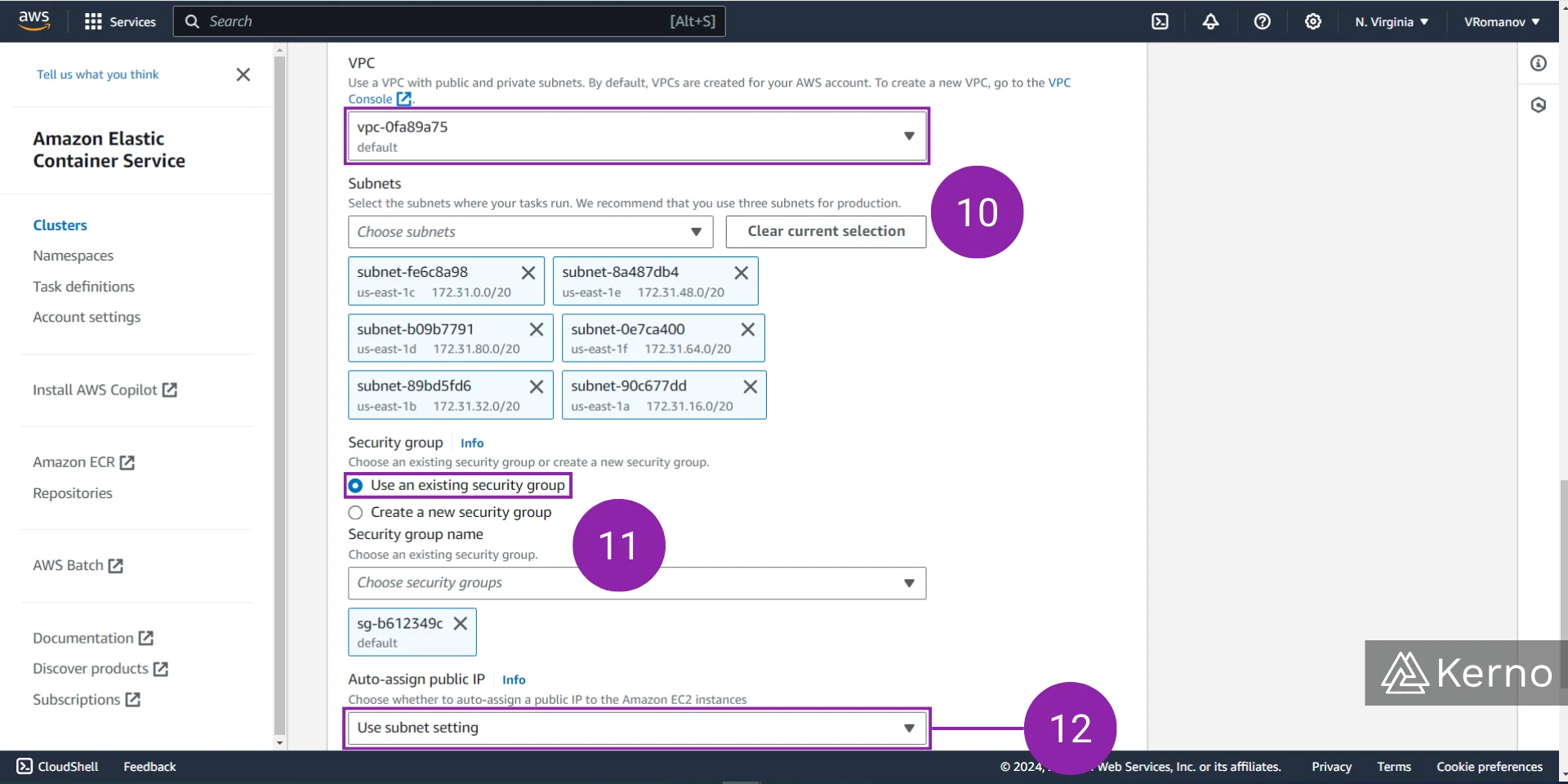

2.10 - Specify the VPC in which you’ll deploy ECS.

2.11 - Specify the Security Group to use for ECS.

2.12 - Networking is critical for both the VPC and ECS: the subnet and public IP settings determine how traffic reaches your instances from outside and within the VPC.
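The settings above apply to the cluster’s EC2 instances. Tasks that use the awsvpc network mode, which Fargate requires, get their own elastic network interface, so their subnets and security groups are chosen when you create a service. The sketch below builds the keyword arguments for boto3’s ECS create_service call; the cluster, service, task definition, subnet, and security group identifiers are placeholders. Tasks in private subnets without a public IP generally need a NAT gateway or VPC endpoints to pull images from a registry.

```python
from typing import List


def build_fargate_service(cluster: str, service: str, task_definition: str,
                          subnets: List[str], security_groups: List[str],
                          desired_count: int = 2, public_ip: bool = False) -> dict:
    """Keyword arguments for boto3's ECS create_service call (Fargate, awsvpc)."""
    if not subnets:
        raise ValueError("at least one subnet is required")
    return {
        "cluster": cluster,
        "serviceName": service,
        "taskDefinition": task_definition,    # "family" or "family:revision"
        "desiredCount": desired_count,        # tasks ECS keeps running
        "launchType": "FARGATE",
        "networkConfiguration": {
            "awsvpcConfiguration": {
                "subnets": subnets,                   # each task's ENI lands in one of these
                "securityGroups": security_groups,    # rules applied to each task's ENI
                "assignPublicIp": "ENABLED" if public_ip else "DISABLED",
            }
        },
    }


if __name__ == "__main__":
    kwargs = build_fargate_service("my-ecs-cluster", "web", "hello-web:1",
                                   subnets=["subnet-0123456789abcdef0"],
                                   security_groups=["sg-0123456789abcdef0"])
    print(kwargs["networkConfiguration"])
    # With boto3 and credentials: boto3.client("ecs").create_service(**kwargs)
```

Defaulting assignPublicIp to DISABLED keeps tasks off the public internet unless you opt in, which suits tasks placed behind a load balancer.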

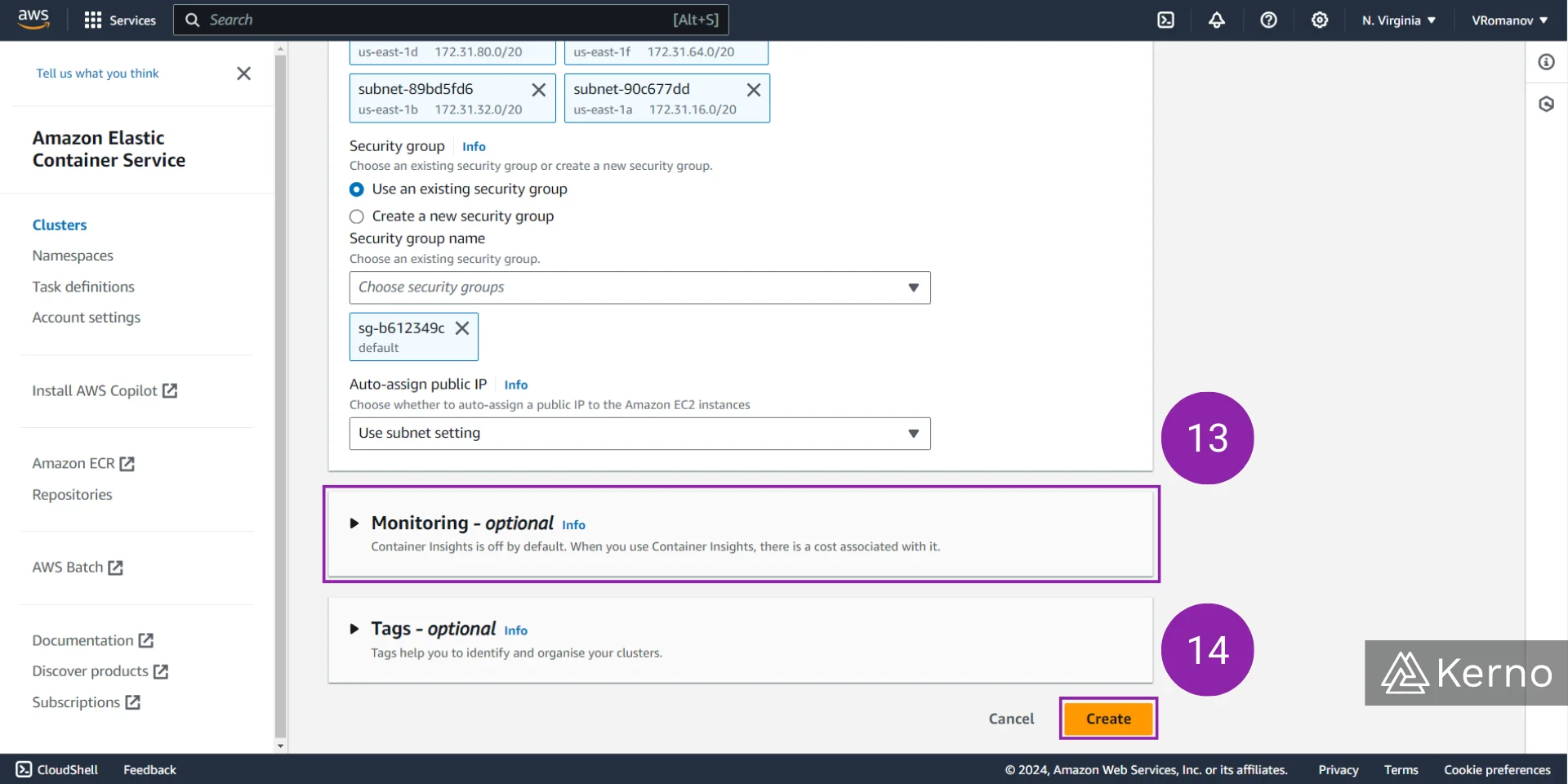

2.13 - Monitoring is offered through Amazon CloudWatch. In this section, you can choose whether to enable Container Insights, which collects additional metrics and logs for the cluster.

2.14 - At the bottom of the page, click “Create” to finalize deploying a cluster.
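For repeatable setups, the cluster-level choices from Step 2 can also be expressed as an API request. The sketch below builds the keyword arguments for boto3’s ECS create_cluster call; it only approximates what the console does, since the console also creates the Auto Scaling group and launch template when you select EC2 instances. The cluster name and any extra capacity provider name are placeholders, and an EC2 capacity provider must already exist before it can be listed here.

```python
import json
from typing import List, Optional


def build_cluster(name: str, container_insights: bool = True,
                  extra_capacity_providers: Optional[List[str]] = None) -> dict:
    """Keyword arguments for boto3's ECS create_cluster call.

    FARGATE and FARGATE_SPOT are AWS-provided capacity providers; an EC2
    capacity provider backed by your own Auto Scaling group can be added
    through extra_capacity_providers.
    """
    providers = ["FARGATE", "FARGATE_SPOT", *(extra_capacity_providers or [])]
    return {
        "clusterName": name,
        "capacityProviders": providers,
        # Used by tasks and services that specify neither a launch type
        # nor a capacity provider strategy.
        "defaultCapacityProviderStrategy": [{"capacityProvider": "FARGATE", "weight": 1}],
        # Container Insights corresponds to the console's monitoring option.
        "settings": [{"name": "containerInsights",
                      "value": "enabled" if container_insights else "disabled"}],
    }


if __name__ == "__main__":
    print(json.dumps(build_cluster("my-ecs-cluster"), indent=2))
    # With boto3 and credentials: boto3.client("ecs").create_cluster(**kwargs)
```

Container Insights can also be toggled on an existing cluster with boto3’s update_cluster_settings, passing the same settings list.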

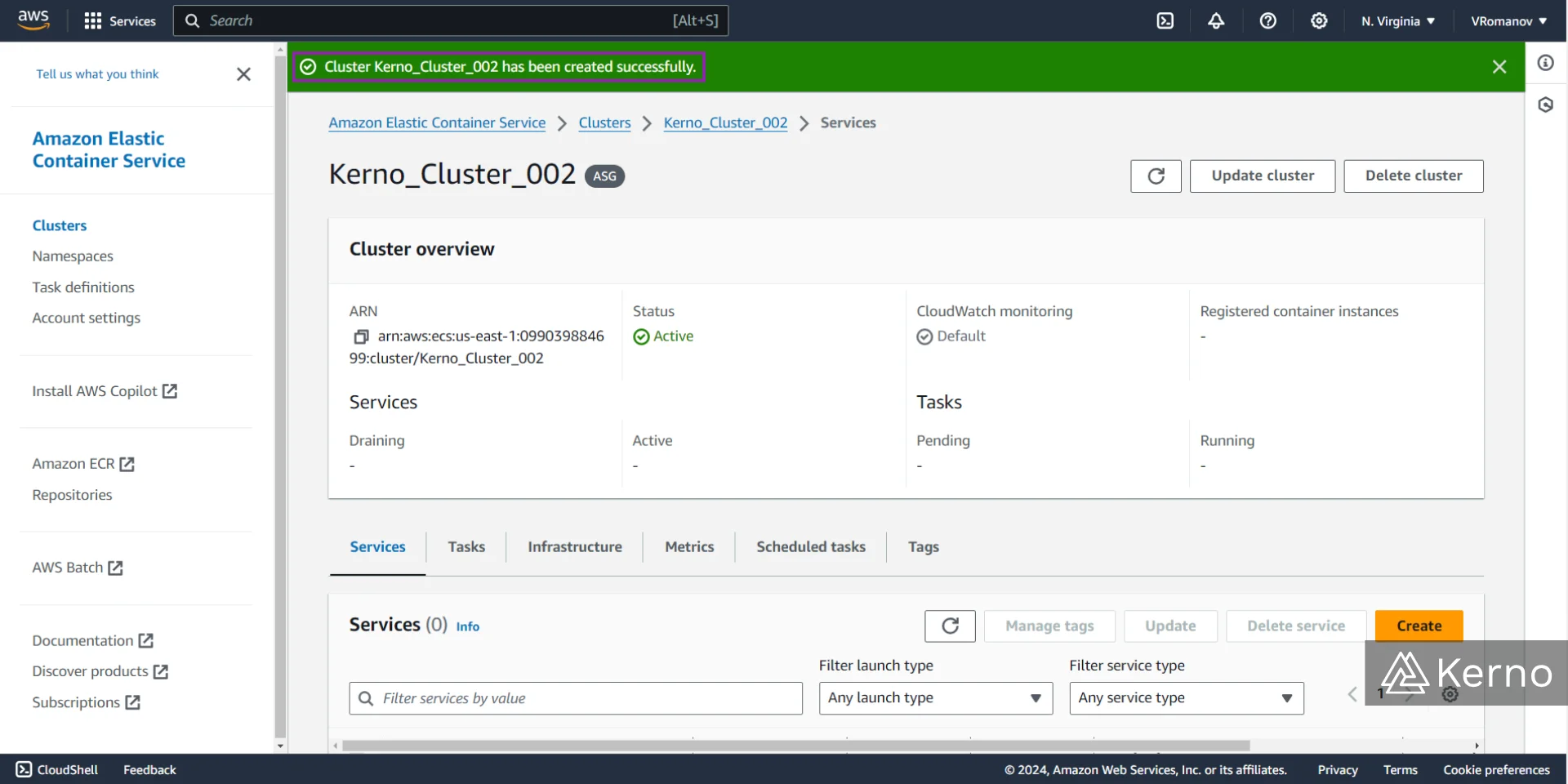

Step 3 - Review the ECS Cluster Deployment

Creating the infrastructure for the ECS cluster we’ve specified will take a moment. AWS will create the resources behind the settings we reviewed in the previous section (such as the Auto Scaling group) and notify you when they’re running. Note that many of these resources have their own consoles and can be viewed elsewhere in AWS.

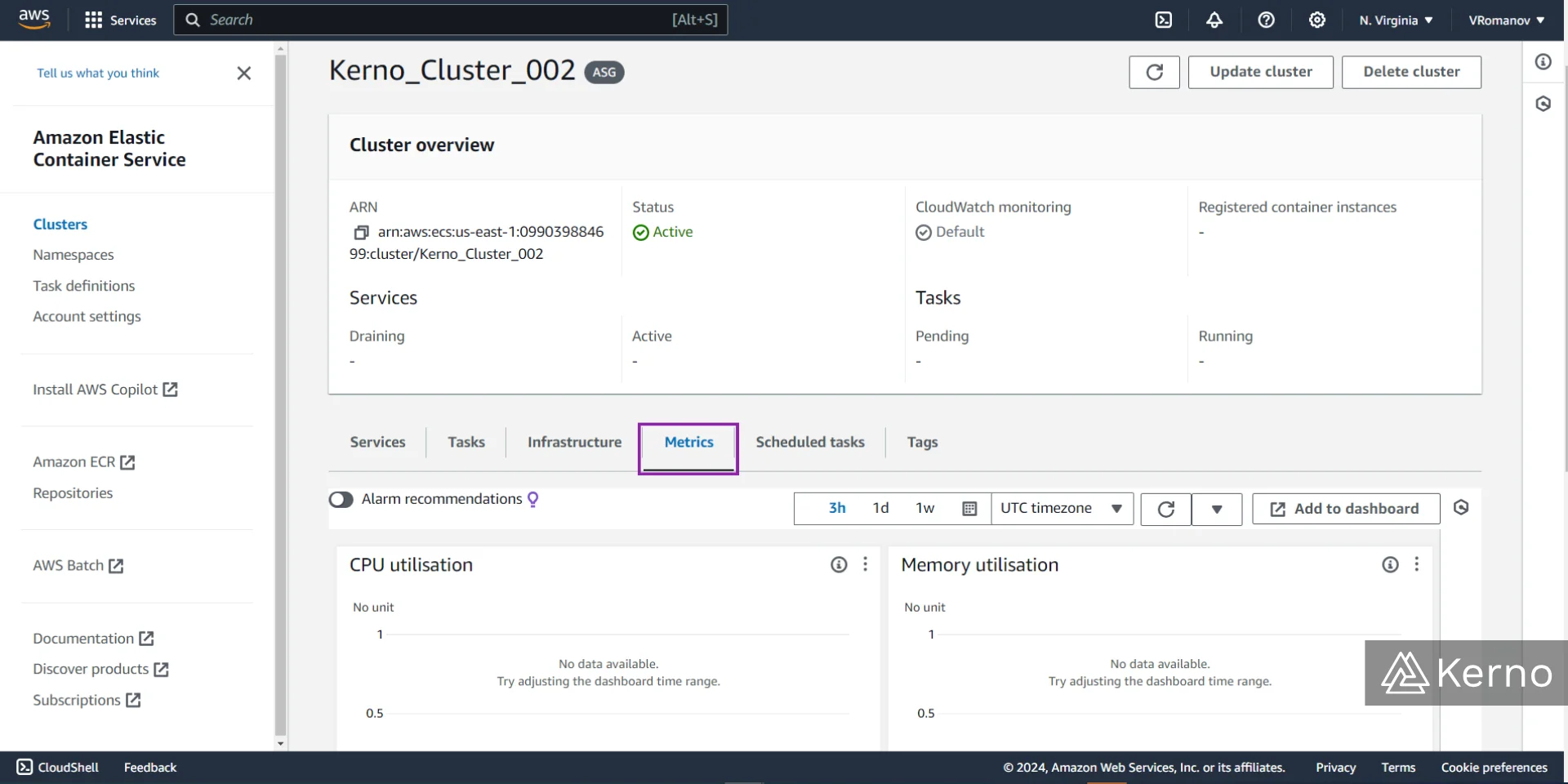

In the figure above, you’ll notice that the cluster interface provides a variety of metrics you can use to optimize your operations. The data hasn’t been populated yet, but once workloads are running you can see what’s running on the cluster, how those applications perform, and how to tweak the infrastructure to suit your needs.
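The same headline numbers the cluster page shows are also available programmatically. The sketch below condenses a response from boto3’s ECS describe_clusters call into one line, using the response’s clusterName, status, registeredContainerInstancesCount, runningTasksCount, pendingTasksCount, and activeServicesCount fields. The sample response in the usage section is hand-written to match the shape of a new, empty cluster; it is not captured output.

```python
def summarize_cluster(response: dict) -> str:
    """One-line summary of a boto3 ECS describe_clusters response."""
    failures = response.get("failures", [])
    if failures:
        reasons = ", ".join(f.get("reason", "unknown") for f in failures)
        raise RuntimeError(f"describe_clusters failed: {reasons}")
    c = response["clusters"][0]
    return (f"{c['clusterName']} [{c['status']}]: "
            f"{c['registeredContainerInstancesCount']} container instances, "
            f"{c['runningTasksCount']} running / {c['pendingTasksCount']} pending tasks, "
            f"{c['activeServicesCount']} active services")


if __name__ == "__main__":
    # Hand-written sample shaped like a describe_clusters response for a new,
    # still-empty cluster; values are illustrative, not captured output.
    sample = {
        "clusters": [{
            "clusterName": "my-ecs-cluster",
            "status": "ACTIVE",
            "registeredContainerInstancesCount": 0,
            "runningTasksCount": 0,
            "pendingTasksCount": 0,
            "activeServicesCount": 0,
        }],
        "failures": [],
    }
    print(summarize_cluster(sample))
    # Live call (requires boto3 and credentials):
    #   summarize_cluster(boto3.client("ecs").describe_clusters(clusters=["my-ecs-cluster"]))
```

Raising on the failures list matters because describe_clusters reports a missing cluster there rather than raising an error.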

Conclusion on ECS

We’ve covered the fundamentals of Docker, Docker images, ECS, Fargate, and more. Virtualization and containerization play an important role in most modern software applications and systems, providing a scalable and cost-effective way to deploy applications across multiple platforms and hardware. ECS, especially with Fargate, lets users avoid managing their own hardware and operating systems and focus on what truly matters for their business: building software and serving clients.